None. The prevalent ideas in ML are (a) "training" a model via supervised learning and (b) optimizing model parameters via function minimization, backpropagation, or the delta rule.
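For anyone who hasn't seen it spelled out, here is a minimal sketch of what "optimizing parameters via the delta rule" amounts to for a single linear unit. The function name, the toy data, the learning rate and the epoch count are all my own illustrative assumptions, not anything from a particular library.

```python
def train_delta_rule(samples, lr=0.1, epochs=200):
    """Fit one linear unit y = w.x + b by repeated error-driven updates.

    samples: list of (inputs, target) pairs; all names here are hypothetical.
    """
    n_inputs = len(samples[0][0])
    w = [0.0] * n_inputs
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            y = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = target - y  # the "delta"
            # Nudge each weight in proportion to the error and its input.
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


# Toy data generated from y = 2*x + 1 (an assumption for illustration).
samples = [([0.0], 1.0), ([1.0], 3.0), ([2.0], 5.0), ([3.0], 7.0)]
w, b = train_delta_rule(samples)
```

Note that the whole procedure is guess, measure the error, adjust, repeat. Backpropagation extends the same error-driven update through multiple layers.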

There is no evidence for trial-and-error iterative optimization in natural cognition. If you tried to map it to cognition research, the closest thing would be the behaviorist theories of B.F. Skinner from the 1930s. These theories of 'reward and punishment' as the primary mechanism of learning have long been discredited in cognitive psychology. It's a black-box, backward-looking view that disregards the complexity of the problem (the most thorough and influential critique of this approach was by Chomsky back in the 50s).

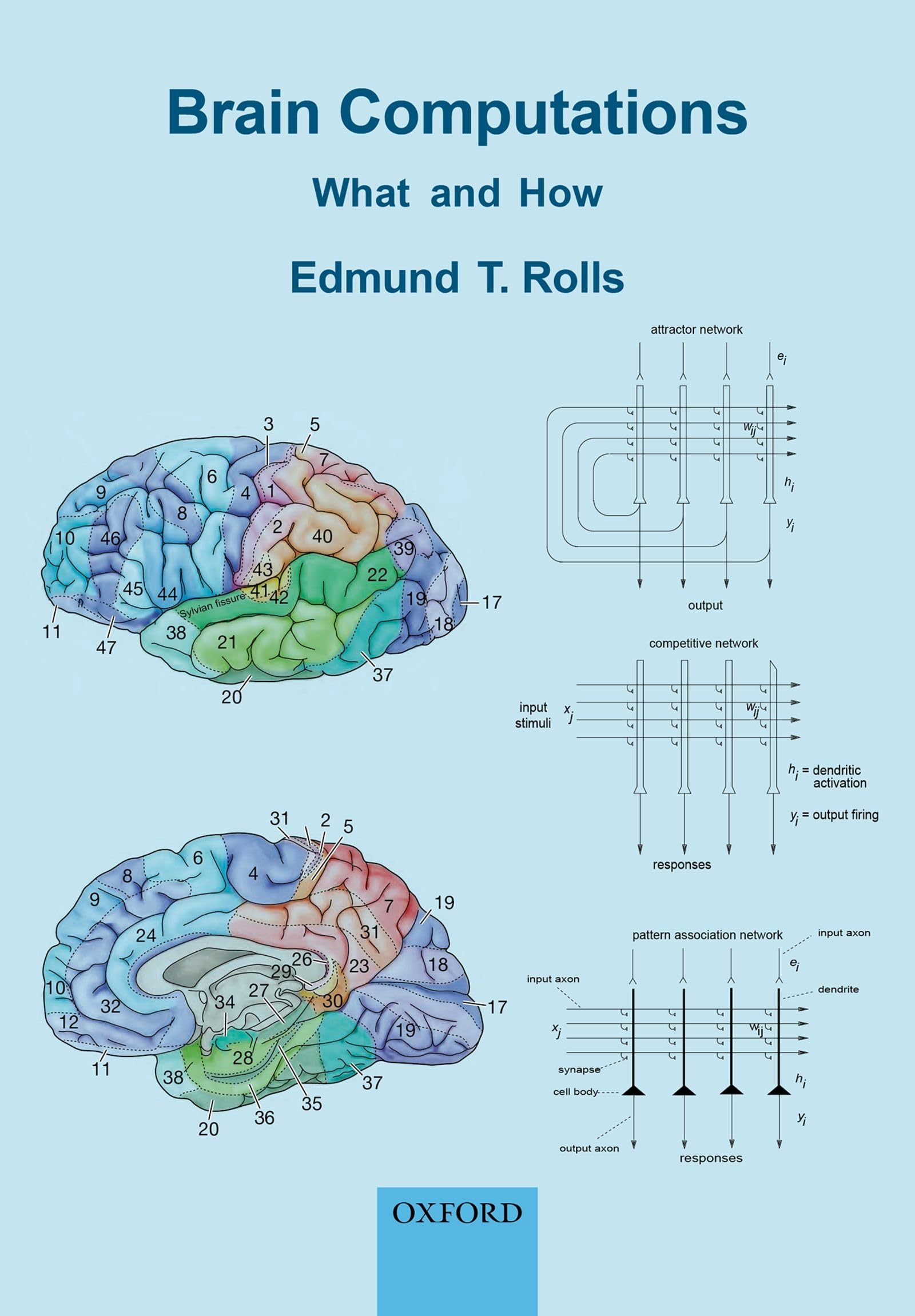

The ANN model, which goes back to the McCulloch & Pitts paper, is based on the neurophysiological evidence available in 1943. The ML community largely ignores the fundamental neuroscience findings made since (for a good overview see https://www.amazon.com/Brain-Computations-Edmund-T-Rolls/dp/... ).
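To show how little is in that 1943 abstraction, here is a sketch of the usual textbook simplification of a McCulloch-Pitts unit: binary inputs, a fixed threshold, and absolute inhibition. The function and gate names are my own, and this is a simplified reading rather than the paper's full formalism.

```python
def mcculloch_pitts(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, else 0.

    excitatory, inhibitory: lists of binary inputs (0 or 1).
    Any active inhibitory input vetoes firing (absolute inhibition).
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# Simple logic gates built from single units (hypothetical helper names).
def gate_and(a, b):
    return mcculloch_pitts([a, b], [], threshold=2)


def gate_or(a, b):
    return mcculloch_pitts([a, b], [], threshold=1)


def gate_and_not(a, b):
    # Fires when a is active and b is not.
    return mcculloch_pitts([a], [b], threshold=1)
```

There is no notion of spike timing, dendritic structure, or synaptic dynamics here, which is the gap the more recent neuroscience literature fills.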

I don't know if it has to do with arrogance or ignorance (or both), but "AI" is currently developed by inventing arbitrary model contraptions with complete disregard for the constraints and inner workings of living intelligent systems, basically throwing things at the wall until something sticks, instead of learning from nature the way, say, physics does. Saying "but we don't know much about the brain" is just being lazy.

The best description of biological constraints from a computer science perspective is in Leslie Valiant's work on the "neuroidal model" and his book "Circuits of the Mind" (he is also the author of PAC learning theory, which is influential in ML theory circles): https://web.stanford.edu/class/cs379c/archive/2012/suggested... , https://www.amazon.com/Circuits-Mind-Leslie-G-Valiant/dp/019...

If you're really interested in intelligence, I'd suggest starting with the representation of time and space in the hippocampus via place cells, grid cells and time cells, which form a sort of coordinate system for navigation in both real and abstract/conceptual spaces. This will likely have the same importance for actual AI as the Cartesian coordinate system has in other hard sciences. See https://www.biorxiv.org/content/10.1101/2021.02.25.432776v1 and https://www.sciencedirect.com/science/article/abs/pii/S00068...
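To give a feel for the "coordinate system" idea, here is a rough sketch of a common idealization of a grid cell's firing map: three cosine gratings 60 degrees apart, summed, which yields a hexagonal lattice of firing peaks. This is a toy model under my own assumptions (function name, normalization to the 0..1 range, spacing parameter), not something taken from the linked papers.

```python
import math


def grid_rate(x, y, spacing=1.0):
    """Idealized grid-cell firing rate in [0, 1] at position (x, y).

    spacing: distance between neighbouring firing peaks (hypothetical units).
    """
    k = 4.0 * math.pi / (math.sqrt(3.0) * spacing)  # wave number for that spacing
    total = 0.0
    for angle_deg in (0.0, 60.0, 120.0):
        theta = math.radians(angle_deg)
        total += math.cos(k * (x * math.cos(theta) + y * math.sin(theta)))
    # The sum of the three gratings ranges from -1.5 to 3; rescale to 0..1.
    return (total + 1.5) / 4.5


# One firing peak sits at the origin, another one `spacing` away at 30 degrees.
peak_here = grid_rate(0.0, 0.0)
peak_next = grid_rate(math.cos(math.radians(30.0)), math.sin(math.radians(30.0)))
```

A population of such cells with different spacings and offsets can in principle encode position, which is roughly the sense in which grid cells act like axes.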

Also see the research on temporal synchronization via "phase precession" as a hint at how lower-level computational primitives work in the brain: https://www.sciencedirect.com/science/article/abs/pii/S00928...

And generally look into memory research in cogsci and neuro. Learning and memory are highly intertwined in natural cognition, and you can't really talk about learning before understanding lower-level memory organization, formation and representational "data structures". Here are a few good memory labs to seed your firehose:

https://twitter.com/WiringTheBrain

https://twitter.com/TexasMemory

https://twitter.com/ptoncompmemlab

https://twitter.com/doellerlab

See Professor Edmund T. Rolls's books on biologically plausible neural networks:

"Brain Computations: What and How" (2020) https://www.amazon.com/gp/product/0198871104

"Cerebral Cortex: Principles of Operation" (2018) https://www.oxcns.org/b12text.html

"Neural Networks and Brain Function" (1997) https://www.oxcns.org/b3_text.html