Found in 2 comments on Hacker News

wanderr · 2013-10-30

· Original

thread

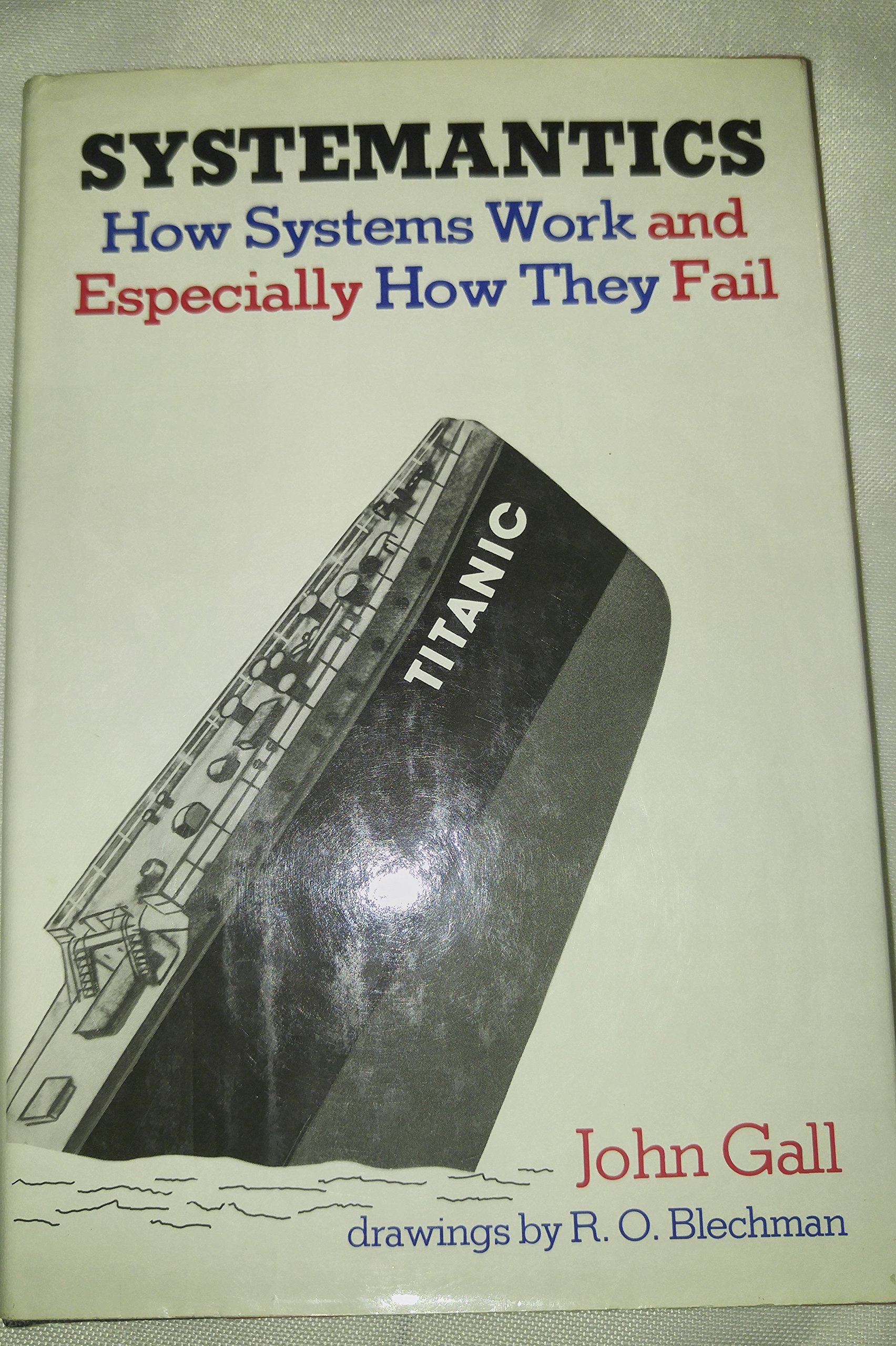

Reminds me of http://www.amazon.com/Systemantics-Systems-Work-Especially-T... "Fail-safe systems fail by failing to fail safe." - John Gall

It does remind me, though, of the book Systemantics: https://www.amazon.com/Systemantics-Systems-Work-Especially-...

One of the core theses of the book is:

The system itself does not actually do what it says it is doing (The Operational Fallacy).

People, engineers included, often forget this. Just because the system says it does X does not mean it actually does X. People would do well to cultivate a healthy skepticism towards complex IT systems. They may not fail most of the time, but eventually they will.