Oh, yeah, it was 1983:

https://www.amazon.com/Fifth-Generation-Artificial-Intellige...?

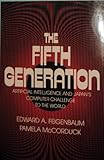

I finished an MSCS in 1985. Ed Feigenbaum was still influential then, but it was getting embarrassing. He'd been claiming that expert systems would yield strong AI Real Soon Now. He wrote a book, "The Fifth Generation", [1] which is a call to battle to win in AI against Japan, which at the time had a heavily funded effort to develop "fifth generation computers" that would run Prolog. (Anybody remember Prolog? Turbo Prolog?) He'd testified before Congress that the "US would become an agrarian nation" if Congress didn't fund a big AI lab headed by him.

I'd already been doing program proof-of-correctness work (I went to Stanford grad school from industry, not right out of college), so I was already using theorem provers and was aware of what you could and couldn't do with inference engines. Some of the Stanford courses were just bad philosophy. (One exam question: "Does a rock have intentions?")

"Expert systems" turned out to just be another way of programming, and not a widely useful one. Today, we'd call it a domain-specific programming language. It's useful for some problems like troubleshooting and how-to guides, but you're mostly just encoding a flowchart. You get out what some human put in, no more.
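To make the "encoding a flowchart" point concrete, here is a minimal sketch of a forward-chaining rule engine in Python. The rules, fact names, and the `forward_chain` helper are all made up for illustration and are not taken from any real expert system shell; the point is only that every conclusion it reaches was typed in by a person.

```python
# Hypothetical troubleshooting rules: (facts that must all hold, conclusion).
# These are invented for illustration, not drawn from any real system.
RULES = [
    ({"no_power_light"}, "check_power_cable"),
    ({"power_light_on", "no_display"}, "check_monitor_cable"),
    ({"check_monitor_cable", "cable_ok"}, "replace_monitor"),
]


def forward_chain(facts, rules=RULES):
    """Fire rules repeatedly until nothing new can be derived.

    Returns only the derived conclusions, not the starting facts.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known - set(facts)


if __name__ == "__main__":
    print(sorted(forward_chain({"power_light_on", "no_display", "cable_ok"})))
    # A symptom no rule mentions yields nothing at all.
    print(sorted(forward_chain({"keyboard_sticky"})))
```

Give it a symptom nobody wrote a rule for and it has nothing to say, which is the "no more" part.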

One idea at the time was that if enough effort went into describing the real world in rules, AI would somehow emerge. The Cyc project[3] started to do this in 1984, struggling to encode common sense in predicate calculus. They're still at it, at some low level of effort.[4] They tried to make it relevant to the "semantic web", but that didn't seem to result in much.

Stanford at one time offered a 5-year "Knowledge Engineering" degree. This was to train people for the coming boom in expert systems, which would need people with both CS and psychology training. They would watch and learn how experts did things, as psychology researchers do, then manually codify that information into rules.[2] I wonder what happened to those people.

[1] http://www.amazon.com/The-Fifth-Generation-Artificial-Intell...

[2] https://saltworks.stanford.edu/assets/gx753nb0607.pdf

[3] https://en.wikipedia.org/wiki/Cyc

[4] http://www.businessinsider.com/cycorp-ai-2014-7

The result was “Machines Who Think: A Personal Inquiry Into the History and Prospects of Artificial Intelligence” (1979), a chronicle of past attempts to mechanize thought.

I was first introduced to her name when I read a book she co-wrote with Ed Feigenbaum - The Fifth Generation: Artificial Intelligence and Japan's Computer Challenge to the World[1]. It took me a while to realize that she also wrote another book that was sitting on my shelf waiting to be read: Machines Who Think. I feel a bit sad now acknowledging that the book is still sitting there waiting to be read. :-(

[1]: https://www.amazon.com/Fifth-Generation-Artificial-Intellige...